This week, Brent, Tara, Erik, and Richie discuss troubleshooting port blocking, page life expectancy issues, problems with turning off CPU schedulers, coordinating two jobs across servers, adding additional log files to an almost-full partition, tips for getting a new SQL Server DBA job, using alias names for SQL Servers, database going into suspect mode during disaster recovery, SQL Constant Care “Too Much Memory” warning, index operational statistics, running newer versions of SQL Server with databases in older version compat mode, and more!

You can register to attend next week’s Office Hours, or subscribe to our podcast to listen on the go.

Office Hours Webcast – 2018-08-08

We can’t connect with Telnet. Now what?

Brent Ozar: First up is a mysterious VRP. VRP says, “Frequently, we have an issue of not being able to connect to port 1433. When we check with Telnet…” Oh, I love it. Grandpa VRP, you’re with me in remembering to use Telnet… “Unable to connect to SQL Server, no ports being blocked by antivirus. After restarting the SQL Server services, we’re able to connect using 1433. What should we do to troubleshoot this next?”

Erik Darling: Turn on the remote DAC.

Brent Ozar: Elaborate.

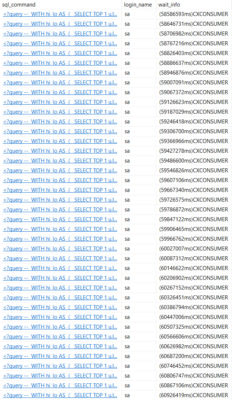

Erik Darling: So usually, when you suddenly can’t connect, and then you restart SQL Server and suddenly you can, you’ve hit an issue called THREADPOOL. Tara’s blogged about it. I think everyone’s blogged about it at some point. Don’t feel bad, though. You’ve just got to check your wait stats. If you see THREADPOOL creeping up in there, even in tiny increments, then it’s most likely the problem you’re hitting. It’s usually caused by blocking, especially parallel queries getting blocked: they take a whole bunch of worker threads, they get blocked, they hang onto those threads while they wait, and then, all of a sudden, you’re out of worker threads.

So that’s usually what it is, and turning on the remote DAC (the dedicated admin connection) will let you sneak in through your little VIP entrance to SQL Server and start figuring out what exactly is causing your THREADPOOL waits. You can run, like, sp_WhoIsActive or sp_BlitzWho or something, and you’re off to the races.

Brent Ozar: That’s good.
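Erik’s advice boils down to comparing wait stats over time. Here’s a minimal Python sketch of that comparison, assuming you’ve already pulled two snapshots of `sys.dm_os_wait_stats` into dicts of `wait_type -> wait_time_ms` (the driver and connection code are deliberately left out). The counters in that DMV are cumulative, so it’s the growth between snapshots that matters. The `sp_configure` text for enabling the remote DAC is included only as a reference string; nothing here runs it.

```python
# T-SQL kept as strings for reference; this sketch never connects to SQL Server.
WAIT_STATS_QUERY = """
SELECT wait_type, wait_time_ms
FROM sys.dm_os_wait_stats;
"""

# Enables the remote dedicated admin connection (DAC) ahead of time,
# so you still have a way in when worker threads run out.
ENABLE_REMOTE_DAC = """
EXEC sp_configure 'remote admin connections', 1;
RECONFIGURE;
"""


def waits_delta(before: dict[str, int], after: dict[str, int]) -> dict[str, int]:
    """Return how much each wait type grew between two cumulative snapshots."""
    return {
        wait_type: ms - before.get(wait_type, 0)
        for wait_type, ms in after.items()
        if ms > before.get(wait_type, 0)
    }


def threadpool_is_growing(before: dict[str, int], after: dict[str, int]) -> bool:
    """Any THREADPOOL growth at all, even a tiny one, is worth investigating."""
    return waits_delta(before, after).get("THREADPOOL", 0) > 0
```

Wait types that didn’t grow are dropped from the delta, so whatever is left is what happened during your sampling window.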

Tara Kizer: Have any of you guys ever tested Telnetting to the SQL Server port while THREADPOOL…

Erik Darling: How old do you think I am?

Brent Ozar: Not when THREADPOOL’s happening though; that’s a great question.

Tara Kizer: I mean, I use Telnet all the time when trying to figure out why I can’t connect to a box, but I just wonder if Telnet would fail when THREADPOOL is happening, because you’re still connecting; it’s just that SQL Server isn’t letting you in because the server is out of worker threads.

Brent Ozar: That’s such a cool question. Now, I want to find out but not badly enough that I’m going to go recreate the THREADPOOL waits.
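Telnet only proves that a TCP handshake to the port succeeds. The same check is easy to script; here’s a small Python sketch using the standard `socket` module, with host, port, and timeout as whatever you pass in. In line with Tara’s open question, a successful handshake doesn’t prove SQL Server will actually log you in when it’s out of worker threads, so read `True` as “the listener answered,” not “SQL Server is healthy.”

```python
import socket


def port_accepts_tcp(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP handshake to host:port succeeds (what Telnet tests)."""
    try:
        # create_connection resolves the host and completes the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, timed out, unreachable, or name resolution failed.
        return False
```

For example, `port_accepts_tcp("sqlprod01", 1433)` (the server name is hypothetical) is the scripted equivalent of `telnet sqlprod01 1433`.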

Why is Page Life Expectancy dropping?

Brent Ozar: See, Christian asks, “We have page life expectancy dropping to zero and there doesn’t appear to be a performance dip. I’ve looked for large queries scanning big portions of data along with queries with large memory grants.” Wow, you’re like ahead of – two for two, you’re doing good. He said, “What else should I zero in on?”

Tara Kizer: Look at your jobs. See if anything lines up with when it drops, because there are lots of things that can make PLE plummet, like index maintenance and statistics updates; those two especially. You could also check the error log to see whether some DBCC command ran that wiped it out.

Brent Ozar: Or, since you mentioned the error log, the other thing you might see there is external memory pressure, like something else driving SQL Server low on RAM: some other process, say an SSIS package, doing something on the box.

Erik Darling: CHECKDB will…

Brent Ozar: But if nobody’s complaining too, I would go on with your day. Go find the things people are complaining about, like everyone wearing black in the webcast.

Erik Darling: I’m like, what does PLE drop from? Starting from like 100 to zero, then…

Tara Kizer: Yeah, what number? And do the math on that, because PLE is measured in seconds. If it never gets past a day’s worth of seconds, convert it to hours or minutes and see if that number even correlates to how often you’re running some of these jobs. Maybe you’re never getting up to a really high number.

Brent Ozar: Or maybe it’s dropping from 5000 to 4000. Who cares?
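Tara’s “do the math” step can be sketched in Python: convert the peak PLE (in seconds) to hours and see which jobs run on roughly that interval. The job names, intervals, and the 10% tolerance below are hypothetical placeholders, not recommendations.

```python
SECONDS_PER_HOUR = 60 * 60  # PLE is reported in seconds


def ple_to_hours(ple_seconds: float) -> float:
    """Convert a Page Life Expectancy value to hours."""
    return ple_seconds / SECONDS_PER_HOUR


def jobs_matching_ple_peak(
    peak_ple_seconds: float,
    job_intervals_hours: dict[str, float],
    tolerance: float = 0.10,
) -> list[str]:
    """Return jobs whose run interval is within `tolerance` (a fraction) of the PLE peak.

    If PLE climbs to roughly N hours and then drops, a job that runs every
    N hours is a good suspect.
    """
    peak_hours = ple_to_hours(peak_ple_seconds)
    return [
        name
        for name, interval in job_intervals_hours.items()
        if abs(peak_hours - interval) <= tolerance * interval
    ]
```

A PLE that tops out around 86,000 seconds is just under 24 hours, which points straight at anything scheduled nightly.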

Should I leave 2 cores offline for Windows?

Brent Ozar: Dan says, “A client with offline CPU schedulers says that they did this on purpose. They want to keep two cores for the operating system. Help me explain why leaving it this way will cause performance problems.”

Erik Darling: How do they know the operating system is only going to use those two cores? What Windows magic do they have? I’ve never seen anyone be able to say, hey, Windows, you can only use these. But maybe they know something I don’t, which is possible; I’ve just never seen it.

Brent Ozar: Maybe they have some other app that they’ve hardcoded to only use specific cores, although I smell BS there too.

Erik Darling: Yeah, I’ve run into that a few times…

Richie Rump: No programmer’s going to do that of their own volition. It’s like, oh let me go ahead and do core programming, woo.

Erik Darling: I’ve run into that a few times. One person had a bunch of JRE executables that were, like, part of the app on their server, and that’s why they left, like, two to four cores offline. Other people have claimed that it’s for SSRS or SSIS or whatever. I’m like, you can’t just tell them, no, you only get these cores; they use what they want. If they can provide some substantive proof that Windows is only using those cores, then word-up.
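To show the client how many schedulers are actually offline, `sys.dm_os_schedulers` reports a `status` for each scheduler (`VISIBLE ONLINE`, `VISIBLE OFFLINE`, and so on). The Python sketch below only summarizes status strings you’ve already fetched; the sample statuses in the test are made up. Taking schedulers offline limits where SQL Server runs its own work; it doesn’t stop Windows from scheduling threads on any core, which is Erik’s point.

```python
# Real DMV; run it yourself and feed the status column to the helpers below.
SCHEDULER_QUERY = """
SELECT scheduler_id, cpu_id, status
FROM sys.dm_os_schedulers;
"""


def scheduler_summary(statuses: list[str]) -> dict[str, int]:
    """Count user-visible schedulers by state; DAC and hidden schedulers are skipped."""
    return {
        "online": statuses.count("VISIBLE ONLINE"),
        "offline": statuses.count("VISIBLE OFFLINE"),
    }


def offline_share(statuses: list[str]) -> float:
    """Fraction of user-visible schedulers that SQL Server is not using."""
    summary = scheduler_summary(statuses)
    total = summary["online"] + summary["offline"]
    return summary["offline"] / total if total else 0.0
```

Putting a number like “25% of the cores you’re licensing sit idle for SQL Server” in front of the client tends to be more persuasive than arguing about Windows internals.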

How do I coordinate jobs across servers?

Brent Ozar: This is an interesting one which Richie might be involved with too. Mark asks, “What’s the best way to coordinate two jobs across servers? We’re trying to do backups on one server and restores on another. Ideally, as soon as the backup job finishes, we’d like the restore to kick off.”

Tara Kizer: Just add another job step to your backup job and have it connect to the other box. You could call sqlcmd and run sp_start_job for the restore job, so the backup job kicks off the restore. Or you could do it in reverse: the restore job could monitor the backup job, I guess, and poll it until it’s done. But I would just add a step to the backup job, since that’s the sequence.
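Tara’s extra job step can be sketched as the sqlcmd call it would make. This Python helper only builds the command line: `-S` names the server, `-E` uses Windows authentication, `-b` makes sqlcmd return a non-zero exit code on errors, and `-Q` runs the query and exits. The server and job names are hypothetical. In an Agent job you would more likely paste the same sqlcmd line into a CmdExec step; note that `sp_start_job` returns as soon as the job is started, so the backup job won’t wait for the restore to finish.

```python
import subprocess


def start_remote_job_command(server: str, job_name: str) -> list[str]:
    """Build a sqlcmd call that starts a SQL Agent job on another server."""
    # Double any single quotes so the name is safe inside an N'...' literal.
    safe_name = job_name.replace("'", "''")
    query = f"EXEC msdb.dbo.sp_start_job @job_name = N'{safe_name}';"
    return ["sqlcmd", "-S", server, "-E", "-b", "-Q", query]


def start_remote_job(server: str, job_name: str) -> None:
    """Run the command; check=True raises if sqlcmd reports an error."""
    subprocess.run(start_remote_job_command(server, job_name), check=True)
```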

I have 10,000 heaps. Why is sp_Blitz slow?

Brent Ozar: Next up, Michael says, “There’s a SQL Server 2017 server running on 2016 Hyper-V. I restored a database there for testing. sp_Blitz takes eight seconds on the 2012 server, but it seems to hang on 2017. sp_WhoIsActive shows that it’s checking for heaps. The database is all heaps and it’s got about 10,000 tables. Where should I be looking?”

Erik Darling: At your heaps, boy. Fix those.

Tara Kizer: Fix the problem. Don’t try to troubleshoot bullets.

Brent Ozar: The hell? I’ve got to read these questions before I ask them out loud. I think all of us would have a problem with 10,000 heaps in a database. On the bright side, you found the problem.
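Fixing the heaps starts with listing them. In `sys.indexes`, a row with `index_id = 0` means the table is a heap. The query below uses the real catalog views; the Python helper is a sketch that filters rows you’ve already fetched, shaped as hypothetical `(table_name, index_id)` tuples.

```python
# Real catalog views; index_id 0 in sys.indexes marks a heap.
HEAP_QUERY = """
SELECT OBJECT_SCHEMA_NAME(i.object_id) AS schema_name,
       OBJECT_NAME(i.object_id)        AS table_name
FROM sys.indexes AS i
JOIN sys.tables  AS t ON t.object_id = i.object_id
WHERE i.index_id = 0;
"""


def heaps(index_rows: list[tuple[str, int]]) -> list[str]:
    """Return the names of tables that are heaps, given (table_name, index_id) rows.

    A heap can still have nonclustered indexes (index_id > 1), so a table is
    a heap as long as one of its rows has index_id 0.
    """
    return sorted({table for table, index_id in index_rows if index_id == 0})
```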

When I add data files, should I add log files too?

Brent Ozar: Mark says, “Afternoon, all. I have to add…” A word about time zones here, it’s 9:20 AM over in California. This time zone thing has me so flummoxed. I’ll be looking at like 2PM, in the afternoon, I’m like, where did all the emails go? Oh, that’s right, most of the country is done for the day.

Richie Rump: And what was the error that I got when I did the deployment today?

Tara Kizer: Time zone…

Richie Rump: Time zone…

Brent Ozar: I hate time zones so much. Mark says, “Good afternoon, all. I have to add additional files to my database as I’m reaching the max size of the partition…” Oh, goodness gracious. “Should I add additional log files as well?”

Erik Darling: No.

Brent Ozar: Why?

Erik Darling: Well, not that you shouldn’t at all, ever. If you have a very tiny drive and your log file keeps getting bigger and bigger and starts to outpace that drive, then yeah, you might need to add a second log file until you can get that drive situation remediated. But SQL Server writes to log files serially, so it only writes to one at a time, and it kind of Ouroboroses them. With a single log file, it goes from front to back and wraps around anyway. With a whole bunch of log files, it does that same locomotion serially across all of them. So no, you don’t really need extra log files unless, you know, you’re running into some sort of apocalyptic situation with the one you’ve got.

Brent Ozar: I always love – every now and then, you’ll read some extreme edge-case of someone who actually needed like eight log files and when you go in, I’m like, I don’t even know how you found that problem. That’s amazing.

Erik Darling: The only person I’ve ever heard talk about that was Thomas Grohser, and he had, like, I want to say 128 1MB log files, each on a specific portion of a specific drive, and everything was used circularly in some way that increased throughput on his whatever crazy Superdome gambling box by like three billion percent. Listening to it, you’re like, wow, it’s amazing you came up with that. And then it’s just like, man, why didn’t you just get some SSDs?

Brent Ozar: I hope I never have that problem.
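Erik’s point about serial, circular log writes can be shown with a toy Python model: lay out each file’s virtual log files (VLFs) in order, then cycle through them. This is a simplification that assumes every VLF has been truncated and is reusable by the time the writer wraps around; in real life, a VLF that is still active blocks reuse and the log grows instead. The VLF counts are hypothetical.

```python
from itertools import cycle, islice


def log_write_order(vlfs_per_file: list[int], vlf_writes: int) -> list[tuple[int, int]]:
    """Return (file_index, vlf_index) for each VLF filled, in write order.

    Toy model: all VLFs are assumed reusable when the writer wraps around.
    """
    # Files are laid end to end: every VLF in file 0, then file 1, and so on.
    layout = [(f, v) for f, count in enumerate(vlfs_per_file) for v in range(count)]
    # The writer walks that layout front to back and then starts over.
    return list(islice(cycle(layout), vlf_writes))
```

With two files of two VLFs each, the writer fills file 0, then file 1, then wraps back to file 0; at no point is it writing to both files at once, which is why a second log file doesn’t buy you throughput.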

How should I get a DBA job?

Brent Ozar: Sri has a tough question. Sri is looking for a new SQL Server DBA job. He says, “Any advice or tips, or what’s the best way to get one?”

Erik Darling: Interview… Apply… No, I don’t know.

Brent Ozar: Cross apply…

Erik Darling: I don’t know, are you, like – what are you doing now? Are you working anywhere near a database now? Are you just, like, tangentially interested in touching a database? Do you, like, program? Are you a JUnit, a sysadmin, helpdesk? Whatever you do now, however close you are to the database now, your next job should just get you a step closer to the database until someone finally allows you to put your arm around the database. Don’t air-hand it, like get in there and hug it.

Brent Ozar: And only use your arm. I would call everybody you’ve worked with in the past, or email them, because you know us technology people; we don’t like phone calls. Email everyone you’ve ever worked with in the past and just say, hey, I’m doing more database work these days and I want to make the next step. Because the people you’ve already worked with know you don’t suck. They know you’re not incompetent. They know you’re easy to get along with. They’ve been out to lunch with you, et cetera.

And if that sentence makes you cringe – if you go, I can’t call anyone I’ve ever worked with before – then it’s a big clue to turn around and start doing things differently at your current job. The people you’re working around are going to be your network for the rest of your life. As I say these words, I am suicidal looking at the other people… Oh my god, I’m doomed. I’m never going to get a good job… But no, like, if any of us know people, that’s the fastest route to get into a new company. If you’re a faceless stranger, it’s really, really hard.

Erik Darling: I don’t know, just from having put my resume somewhere, like, years ago, I still get regular recruiter emails like, “Hey, we have this technology position open, you might want to move six states away…”

Tara Kizer: Those are always funny…

Erik Darling: Become an SSIS expert.

Richie Rump: It’s like, oh, so you did Visual Basic 6… 20 years ago.

Erik Darling: Like everyone else.

Richie Rump: Maybe go to a SQL Server user group meeting. There’s usually one or two folks popping up, hey I’m looking for this, I’m looking for that, and there’s usually a recruiter hanging around there, lurking around the back, you know. You can notice the recruiter because he’s the only one that’s talking to people. That’s the recruiter.

Brent Ozar: Usually overdressed.

I have a query that’s slow in the app, fast in SSMS…but it’s not that.

Brent Ozar: Pablo says, “My app takes one minute to execute an operation. I captured all kinds of metrics and they say that the T-SQL always finishes in two seconds max with no waits. Where should I go to seek the bottleneck?”

Tara Kizer: Seems like your application needs to be looked into. It sounds like the query is completing very fast and the bottleneck’s in the application.

Brent Ozar: I’d also look at the metrics on the app server, like how busy the CPU is, whether it’s swapping to disk too.

Erik Darling: So, like, ASYNC_NETWORK_IO is a good wait stat to keep an eye on, in case SQL Server is fire-hosing data at your app and your app isn’t consuming it in a timely manner. I saw recently that the Balanced power plan on an app server’s CPUs was costing, like, 30% to 50% in app response time. So you know, little things that you can check on.

Richie Rump: Run a profiler in your application, because you may be getting the data quickly, but you may be doing some processing on that data which is taking a long time. The profiler will tell you how long each function and each line is taking to execute, so…

Erik Darling: What’s a good profiler to run for that kind of app code?

Richie Rump: It depends on your language.

Erik Darling: Assuming it’s probably C# or something, what would you use?

Richie Rump: I forget the name of it. It’s the one that…

Erik Darling: It wasn’t good then…

Richie Rump: Well, I haven’t had a need to run C# profiling in a very long time…

Erik Darling: Richie has a stopwatch…

Richie Rump: Whatever the JetBrains one is called. Hey, I write fast code, man, I don’t need profilers.
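Before reaching for a full profiler, a crude split between “time waiting on the database” and “time spent in app code” already tells you which side to dig into. The question is probably about a .NET app, so treat this Python context manager as an illustration of the idea rather than a drop-in tool; `run_query` and `process` in the comments are hypothetical stand-ins for your own code. For per-function detail in Python, the standard library’s profiler runs as `python -m cProfile -s cumulative your_app.py`.

```python
import time
from contextlib import contextmanager


@contextmanager
def timed(label: str, timings: dict[str, float]):
    """Add the wall-clock time spent inside the with-block to timings[label]."""
    start = time.perf_counter()
    try:
        yield
    finally:
        # Accumulate, so the same label can wrap several calls.
        timings[label] = timings.get(label, 0.0) + time.perf_counter() - start


# Usage sketch (run_query and process are hypothetical):
#   timings = {}
#   with timed("database", timings):
#       rows = run_query()
#   with timed("app processing", timings):
#       result = process(rows)
#   print(timings)
```

If “database” stays around Pablo’s two seconds while “app processing” accounts for the rest of the minute, the profiler goes on the app, not on SQL Server.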

Brent Ozar: I thought he was totally going to go for, well when I fix Brent’s queries that come out of PowerBI, I get out the hourglass…

Richie Rump: Can I tell you, I went in yesterday to say, okay, I want to see where the slowness is on this server, and the top ten slowest queries are, like, Brent’s PowerBI queries, boom, boom, boom. And I’m like…

Brent Ozar: I don’t write lightweight queries. I also don’t write good queries. There’s, like, no where clause: give me everything. So I am like the preacher who stands up in the pulpit and goes, don’t order by in the database, order by in the database is a bad idea. And you know what I have to do in my queries? I have to use order by, because PowerBI can’t manage to sort stuff on multiple columns by default. If you want to sort on three columns, you have to come up with a synthetic column in the application or in the database server, order by that on the way out, and then PowerBI will get it. I had to ask Erik for help in order to… I’m like…

Erik Darling: Can you imagine the level of desperation that comes from asking me? That’s like – unless it’s like, I need help moving, then…

Richie Rump: Brent was so embarrassed, he didn’t ask me; he asked Erik.

Brent Ozar: How do I come up with that row number on multiple columns? I suck so bad at windowing functions, it’s legendary. I’m just – it’s not like I don’t like them, they’re awesome. I just don’t ever get to write new queries.

Richie Rump: I think I had a presentation on windowing functions I probably should throw your way there, maybe. No…

Brent Ozar: Unsubscribe.
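The synthetic sort column Brent needed is the classic `ROW_NUMBER() OVER (ORDER BY col1, col2, col3)` in T-SQL. The same idea, sketched in Python for clarity: sort on a tuple of the columns, then number the rows. Column names in the test are hypothetical. Like `ROW_NUMBER()`, ties get distinct numbers; Python’s sort is stable, so tied rows keep their input order, whereas SQL Server makes no such promise unless the `ORDER BY` is unique.

```python
def add_sort_key(rows: list[dict], columns: list[str]) -> list[dict]:
    """Return copies of rows, ordered by `columns`, each with a 1-based 'sort_key'.

    One synthetic column that encodes a multi-column sort, like
    ROW_NUMBER() OVER (ORDER BY col1, col2, col3) in T-SQL.
    """
    ordered = sorted(rows, key=lambda row: tuple(row[c] for c in columns))
    return [{**row, "sort_key": n} for n, row in enumerate(ordered, start=1)]
```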

Erik Darling: You know, we could also just hit, like, some torrent site and get Tableau Server or something.

Richie Rump: You wouldn’t say that if you’d used it.

Erik Darling: No, probably not. But I would say that if I used SSRS, which is almost PowerBI, so I’m probably going to want to get Tableau.

Brent Ozar: You would say it if you used PowerBI…

Erik Darling: Well I do. I hit refresh on PowerBI and I’m suicidal.

Richie Rump: That’s because it hits refresh on Brent’s queries. That’s why you’re suicidal.

Brent Ozar: The lights go dim at Amazon.

Erik Darling: I just love watching the little thing spin when it’s refreshing, waiting on other queries, refreshing – like 12, this number of rows loaded and it’s just spinning and I’m like, oh…

Richie Rump: We had a huge spike in read IOPS yesterday and I’m like, what is this? What is going on here? Brent, was that you? He was like, no, that was not me, I ran it at this time. And I’m like, you do realize this was like two o’clock Eastern? He was like, oh wait, yeah, that was me. I’m on the West Coast now.

Brent Ozar: I’m like, oh I still have time before the afternoon rush to go run a bunch of PowerBI queries. Oh no, it’s already afternoon in Miami. It’s probably tomorrow in Miami.

Richie Rump: Yeah, it is. It is. Welcome to the future.

Should I use a DNS alias?

Brent Ozar: Hannah says, “Do you use alias names for SQL Servers? What are the pros and cons of using a CNAME for access to your SQL Server?”

Tara Kizer: No real drawbacks on it. The only thing I could think of is needing to have a relationship with the DNS team, so that if you switch servers, you can get the alias switched over, and remembering, on upgrade night, to have the DNS team ready to make that change; otherwise you’re going to be waking someone up.
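On upgrade night it helps to confirm where the alias actually points before and after the DNS team makes the change. Python’s `socket.gethostbyname_ex` returns the canonical name, any aliases, and the IPv4 addresses for a name, which is enough for a quick sketch; `sql-prod.example.com` in the comment is a hypothetical alias. Keep in mind that clients and resolvers can cache the old answer until the record’s TTL expires, so a correct lookup from your desk doesn’t guarantee every app server sees the change yet.

```python
import socket


def resolve_alias(name: str) -> tuple[str, list[str], list[str]]:
    """Return (canonical_name, aliases, ipv4_addresses) for a host name.

    For a CNAME, canonical_name is the host the alias currently points at.
    """
    return socket.gethostbyname_ex(name)


# Usage sketch (hypothetical alias):
#   canonical, aliases, addresses = resolve_alias("sql-prod.example.com")
#   print(canonical, addresses)
```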

Why does my execution plan show a key lookup?

Brent Ozar: Marcy asks, “I was stumped by something that feels like it must have an obvious answer. Why would an execution plan have an index seek on a non-clustered index? Everything that it needs is in the non-clustered index, but it still does a key lookup to the clustered index.”

Tara Kizer: It certainly needs something. Look at the output list of the…

Brent Ozar: Predicate…

Tara Kizer: Yeah, hover over it and see what it’s missing. It’s grabbing something…

Erik Darling: Something’s in there.

Brent Ozar: She says the predicate doesn’t show any columns and the output doesn’t show – she didn’t say the output, but I’m guessing, knowing Marcy, the output’s not in there.

Erik Darling: I was going to say, if you’re able to share the execution plan, stick it on PasteThePlan and I would be happy to take a look at it.

Brent Ozar: The other thing is, if it’s a modification query, if it’s doing an update then that can also get locks on the – I’ve seen that grab the key lookup on the clustered index, but…

I got this strange interview question…

Brent Ozar: Niraj says, “I was asked in an interview, our database went into suspect mode during a restore recovery, how would you fix it?”

Tara Kizer: I would fumble in an interview for something like this, because how often does this happen? It happens so infrequently. Sometimes things go into suspect because you’ve done something horribly bad, but it’s rare to encounter it, especially on a production database where you’re having to do recovery. In a test environment, sure, this type of thing might happen, but in production it’s rare.

Brent Ozar: And suspect is – it’s not like it’s restoring…

Tara Kizer: Suspect is – probably you’ve lost the disk behind the database. There’s something really bad happening there.

Erik Darling: That happened to me my first day on my last job. I was sitting there looking – I was like just sitting down. I had just gotten my laptop and I was going…

Tara Kizer: They had just given you access too.

Erik Darling: Exactly, and I had like a week or two worth of alert emails that I had to delete before I could even use my email account. So I’m going through those and new ones start coming in: this database is in suspect mode. And I’m like, that’s it. I’m going to get fired on the first day.

Richie Rump: Wow, day one hazing rituals. That is amazing.

Brent Ozar: That would be good.

Erik Darling: It turned out that the SAN guy was moving a LUN somewhere and it was expected, but no one told me. I’m sitting there, like, I’m done.

Richie Rump: Why is it always the SAN guy?

Erik Darling: Because they have the most power. They control, like, everything. No matter what you use…

Brent Ozar: [crosstalk] it’s transparent, usually. But then when things break…

Richie Rump: It’s really payroll that has the most power, but that’s okay.

Brent Ozar: Human resources, especially our human resources. The only thing I’d look at (and this is terrible; I know I’m going to get flamed for it by somebody, because you can never say anything perfect about suspect) is antivirus. I’ve often seen antivirus grab a lock on a database file when SQL Server restarts and then not let go while SQL Server is trying to start up. Then it’s just a matter of getting access to the file again. The first thing I’d say, too, is: if this thing goes into suspect mode so often that you’re asking me about it in an interview, let’s talk about your storage and your hardware. Is that something you’re going to have me do every day? Because I’m not sure I really want this job.

Erik Darling: Fire drill.

How do you manage large amounts of VLFs?

Brent Ozar: Anna asks, “How do y’all manage large amounts of VLFs?”

Tara Kizer: Fix it. You need to fix it, and once you fix it, it shouldn’t happen again. So fix your auto-growths. Change them so they’re not 1MB or some other low number. You want them a little bigger, but not too big, so don’t set them to some really large number. It’s the auto-growth size of the log files that’s really important. To fix the existing issue, you need to shrink the log down to a really small size and grow it back out. But changing the auto-growth needs to happen too, so this doesn’t keep happening.
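Here’s a rough Python model of why tiny auto-growths pile up VLFs, based on the growth rules commonly described for SQL Server 2014 through 2019: a growth smaller than 1/8 of the current log size adds 1 VLF; otherwise a growth up to 64MB adds 4, up to 1GB adds 8, and anything larger adds 16. Treat the exact boundaries as approximate, and note that SQL Server 2022 changed the rules again. To count the VLFs you actually have, `sys.dm_db_log_info` (SQL Server 2016 SP2 and later) or `DBCC LOGINFO` on older builds will tell you.

```python
def vlfs_added(current_log_mb: float, growth_mb: float) -> int:
    """Approximate VLFs created by one log growth (2014-2019 style rules).

    Assumption: boundary handling (< vs <=) is approximate.
    """
    if growth_mb < current_log_mb / 8:
        return 1
    if growth_mb <= 64:
        return 4
    if growth_mb <= 1024:
        return 8
    return 16


def total_vlfs(initial_mb: float, target_mb: float, growth_mb: float) -> int:
    """Estimate VLFs after growing a log from initial_mb to target_mb in fixed steps."""
    # The initial allocation has no existing log to compare against.
    size, count = initial_mb, vlfs_added(0, initial_mb)
    while size < target_mb:
        count += vlfs_added(size, growth_mb)
        size += growth_mb
    return count
```

Growing a 1MB log to 1GB one megabyte at a time lands at 1,051 VLFs in this model, while 512MB growths get there with 20, which is the whole argument for Tara’s “not 1MB, but not huge either.”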

Why is SQL ConstantCare warning about too much memory?

Brent Ozar: Daryl says, “SQL ConstantCare is warning me about too much memory. I thought I was doing them a favor. Can I just push the memory back down? These folks build cubes and I thought more memory would help.” Well, the way that check works internally is it looks at whether your buffer pool had stuff in it and is now empty. Typically, that’s driven by someone running a query with a large memory grant, and you mentioned building cubes, which can totally do it: select star from a table with no where clause and a giant order by.

That query may need a giant memory grant in order to run, and SQL Server frees up buffer pool memory to give it that grant. If you run sp_BlitzCache with the sort order of memory grant, you’ll see the queries that have been getting large grants, and that’s what I would go through and troubleshoot. Say you’ve got a box with 256GB of RAM and queries are getting 60GB every time they run; that’s when you start tuning those queries.
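Brent’s triage (sort by memory grant and tune from the top) can be sketched in Python once you have grant sizes per query, for example copied out of sp_BlitzCache’s results. The query names, grant sizes, and the 10%-of-server-memory threshold are hypothetical; pick a threshold that makes sense for your box.

```python
# sp_BlitzCache call using the memory grant sort order Brent mentions.
BLITZCACHE_BY_GRANT = "EXEC sp_BlitzCache @SortOrder = 'memory grant';"


def flag_big_grants(
    grants_gb: dict[str, float],
    server_memory_gb: float,
    threshold: float = 0.10,
) -> list[tuple[str, float]]:
    """Return (query, grant_gb) pairs above threshold * server memory, largest first."""
    limit = server_memory_gb * threshold
    big = [(query, grant) for query, grant in grants_gb.items() if grant > limit]
    return sorted(big, key=lambda item: item[1], reverse=True)
```

On the 256GB example, a 60GB cube-build grant is flagged first, which is where the tuning should start.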

Anything to look out for with 2016 upgrades?

Brent Ozar: Steven asks, “I would like to know your point of view on upgrading SQL Server from 2012 to 2016. Are there any considerations I should look out for or risks?”

Erik Darling: Yeah, 2018.

Tara Kizer: I don’t think I would bother with 2016. I would go with 2017 if 18 wasn’t out.

Erik Darling: Yeah, it’s not like getting a deal on a used car. It’s not like, oh, I’m going to get the 2016 model because it’s cheaper. Go to 2017.

Tara Kizer: You know what? The county of San Diego, where I started my IT career, has a policy. They’re not the ones that run the IT; they outsource that. But the county of San Diego, the government, has a policy that you can never be on the current version; you always have to be one version back. It’s because of running Microsoft products all these years and running into major operating system issues. And now the outsource company, the IT people, can never be on current technologies. So it’s a bad policy.

Richie Rump: What about an in-place upgrade? Should they do that?

Tara Kizer: Yeah, sure, why not…

Erik Darling: While they’re doing dumb crap, they might as well just do it. Just make the most of this. Explore the space.

Brent Ozar: Look out for the cardinality estimator too; which one you get depends on the database’s compatibility level, so changing the compatibility level on a database can change your plans. But I’m a huge fan of the Microsoft SQL Server upgrade guides. They’ve published huge upgrade guides. You don’t read the whole thing; no one has time for that. What you do is look at the table of contents, and you’ll learn a ton just from that: the stuff they warn you about.

Erik Darling: Pay special attention to, like, breaking…

Brent Ozar: Yeah, small note.

Where can I learn more about index usage statistics?

Brent Ozar: Anika asks, “Is there a good explanation online…” No… “About the metrics that Management Studio shows under index usage statistics; for example, range scans and singleton lookups. I’m trying to figure out what’s going on, specifically index operational statistics.”

Tara Kizer: Well we have to wonder why you’re using Management Studio’s index stuff. Use our sp_BlitzIndex. It will become more clear.

Richie Rump: Yeah, big time.

Tara Kizer: I don’t ever look at that stuff in Management Studio. Index usage – I don’t even know how to get to that screen.

Erik Darling: Things you forget. Reports, maybe?

Brent Ozar: Yeah, and the DMVs are actually really good in terms of – the Books Online documentation on DMVs is really good. I just don’t think any of us pay attention to the specifics inside those range scans and singleton lookups because a range scan can be good or bad. Singleton lookups can be good or bad; that’s okay. I just want to know that the index is getting used.

Richie Rump: A lot of times, I want to target that range scan. I mean, I want to hit that because that’s where I want to go. I write better queries than you, Brent. Just remember that.

Brent Ozar: Look, I need select star for all the singleton lookups. I need to do range scans.

Richie Rump: Predicates? I’ve never heard of her.

Brent Ozar: Predadate, what? Anika follows up with, “Can I use sp_BlitzIndex in production? We don’t really have dev; don’t ask.” So if I had to pick the level of risk between running sp_BlitzIndex in production versus the level of risk of not having a development environment, guess which one I’m more concerned about. Take a wild hairy guess.

Erik Darling: But you know, to answer the question a little bit, we run sp_BlitzIndex in other people’s prod all the time, so we’re pretty cool with it. If it does anything weird on your server, let us know. We have GitHub for that; that’s our insurance policy. You can let us know.
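Brent’s “I just want to know that the index is getting used” check maps onto `sys.dm_db_index_usage_stats`, whose `user_seeks`, `user_scans`, and `user_lookups` columns count reads and `user_updates` counts writes. (The range scans and singleton lookups Anika asked about live in `sys.dm_db_index_operational_stats` as `range_scan_count` and `singleton_lookup_count`.) The Python helper below is a sketch that summarizes one row you’ve already fetched; the sample rows in the test are made up. These counters reset when the instance restarts, so judge them against uptime.

```python
# Real DMV and catalog view; run in the database you care about.
USAGE_QUERY = """
SELECT OBJECT_NAME(s.object_id) AS table_name, i.name AS index_name,
       s.user_seeks, s.user_scans, s.user_lookups, s.user_updates
FROM sys.dm_db_index_usage_stats AS s
JOIN sys.indexes AS i
  ON i.object_id = s.object_id AND i.index_id = s.index_id
WHERE s.database_id = DB_ID();
"""


def summarize_usage(row: dict[str, int]) -> dict[str, object]:
    """Collapse one usage-stats row into reads, writes, and whether it's used at all."""
    reads = row["user_seeks"] + row["user_scans"] + row["user_lookups"]
    writes = row["user_updates"]
    return {"reads": reads, "writes": writes, "used": reads > 0}
```

An index with thousands of writes and zero reads is pure overhead, which is the same thing sp_BlitzIndex surfaces for you without the manual math.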

Have the police got Tara surrounded?

Brent Ozar: Ron asks, “Is there a police department copter over you, Tara? I hear them broadcasting over a speaker.”

Tara Kizer: What?

Erik Darling: Creep.

Tara Kizer: No…

Erik Darling: Stop triangulating Tara, monster.

Tara Kizer: Ron’s in East County too. On Monday I did hear helicopters outside, and then I saw, in one of my Chrome windows, a notification of a fire that broke out in San Diego. So I’m on the call with my client and it’s like, you know, I’m in California, and it is very, very active fire season. I’m going to look out the window real quick just to make sure…

Richie Rump: Make sure the fire isn’t coming towards us; we’re good.

Tara Kizer: It was the one that started in Ramona. I think it was on Monday, but I think that one’s under control.

Brent Ozar: There have been a lot of fires this year; a lot.

Is compat level 2008 a problem?

Brent Ozar: John asks, “I have SQL Server 2016 with databases in compat level 2008. Is that limiting SQL Server?”

Tara Kizer: You’re not getting some of the T-SQL features. I mean, it just depends what you need.

Brent Ozar: Not getting some of the new cardinality estimator stuff.

Tara Kizer: A lot of people don’t want that new guy though.

Brent Ozar: Backfires…

Erik Darling: Will that mess with the windowing function and the over-clauses that were added in 2012?

Tara Kizer: Yeah.

Erik Darling: So as far as I’m concerned, you can pretty safely bump up from, like, 2008 or 2008 R2 to 2012 without sweating too much about what’s going on there. Obviously the later bump-ups can cause some bumps in the night, but 2012 would probably be my bare minimum right now, because, in case anyone doesn’t know, 2008 and 2008 R2 won’t be supported anymore in, like, a year.
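For John’s SQL Server 2016 box, the compatibility levels it accepts are 100 (2008/2008 R2), 110 (2012), 120 (2014), and 130 (2016), and the new cardinality estimator is the default from 120 up. This Python sketch just encodes that mapping and builds the `ALTER DATABASE` statement for a bump like Erik’s move to 110; the database name in the test is hypothetical. It ignores the database-scoped `LEGACY_CARDINALITY_ESTIMATION` setting and query hints, either of which can override the estimator choice.

```python
# Compatibility levels SQL Server 2016 accepts, and the version each one mimics.
SQL2016_COMPAT_LEVELS = {
    100: "SQL Server 2008 / 2008 R2",
    110: "SQL Server 2012",
    120: "SQL Server 2014",
    130: "SQL Server 2016",
}


def uses_new_cardinality_estimator(compat_level: int) -> bool:
    """New CE is the default from compat level 120 up (overrides not modeled)."""
    return compat_level >= 120


def set_compat_level_sql(database: str, level: int) -> str:
    """Build the ALTER DATABASE statement, rejecting levels 2016 doesn't accept."""
    if level not in SQL2016_COMPAT_LEVELS:
        raise ValueError(f"SQL Server 2016 does not support compatibility level {level}")
    # Escape ] so the name is safe inside [brackets].
    quoted = "[" + database.replace("]", "]]") + "]"
    return f"ALTER DATABASE {quoted} SET COMPATIBILITY_LEVEL = {level};"
```

Going from 100 to 110 keeps the legacy estimator, which is part of why that first step is the low-drama one; 120 and up is where plan changes from the new estimator start to show.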

Brent Ozar: It’s coming fast. It’s going to come really fast because, you know of course, the time you start having that discussion with management about this isn’t supported next year, it’s not like they’re going to go, oh well go ahead and install 2017. Go ahead, you can do that this weekend…

Erik Darling: We just got this new server in; crazy you mention it. We got all the paperwork ready and the budget was there. It was amazing. The stars aligned.

Brent Ozar: I had a sales call a while back and somebody had had the hardware sitting in the data center for over a year. They’re like, oh, it’s ready to go for the new SQL Server. I’m like, man, at this point, this isn’t normal. Why do you leave it on the pallet?

Erik Darling: We had a client-client, not just a sales call, we had a client-client who had a brand new 2016 box sitting around forever and ever and they didn’t do anything with it. They didn’t move anything over to it until they found corruption on their 2008 box. That was the impetus to start moving stuff. Like, oh wait, this one’s screwed. They were like pushed out, forced out, in order to get off that hardware.

Brent Ozar: We should get all our listeners together and do like a potluck hardware kind of thing so that the people with the extra hardware could give Anika their development server.

Richie Rump: See, I tried to do that but then Brent made me turn down the hardware in RDS, so…

Brent Ozar: Yeah, it’s true. Richie went in armed for bear.

Erik Darling: If anyone wants to buy my old, or buy my current desktop, I will sell it to you autographed for like twice as much as I paid for it.

Richie Rump: Does that come with a warranty on it, since you built it yourself?

Erik Darling: Yeah, the warranty is whatever the postal service will cover for insurance.

Brent Ozar: It comes with tire treads on it.

Erik Darling: If I can send this thing media mail, so…

Brent Ozar: Well that does it for this week’s Office Hours. Thanks, everybody, for hanging out with us and we will see y’all next week. Later, everybody.
