This week, Richie and Tara discuss Richie’s current projects, Availability Groups, offloading reads and backups, applying service packs, whether production should ship Agent jobs to the disaster recovery server, security checks and scans in SQL Server, CPU performance issues when upgrading to SQL Server 2016 and 2017, database backups and restores, whether there’s currently a DBA shortage, and Richie’s favorite board game.


You can register to attend next week’s Office Hours, or subscribe to our podcast to listen on the go.


Enjoy the Podcast?

Don’t miss an episode, subscribe via iTunes, Stitcher or RSS.

Leave us a review in iTunes

Office Hours – 11-8-17

Do we see more on-premises or cloud servers?

Tara Kizer: Michael has a question: “Currently, the SQL Server-related projects you’re working on: SQL Server on-premises or SQL Server cloud-based implementations…” Richie, what are you doing for all the projects you’re working on? Erik and I don’t really work on projects; we’re just…

Richie Rump: Yeah, so I’m purely cloud-based now, doing a lot of work in Postgres in the cloud. Not SQL Server, oddly enough. I’m doing a bunch with some of the NoSQL databases in the cloud as well, mainly the NoSQL side of things with Lambda and all that. I haven’t played much with Azure over the past six months or so; it’s all been AWS and getting stuff up and running there. The stack we’re using for Paste the Plan is mainly what I’m messing with, plus C# with .NET locally. So a lot of fun stuff, but no, I haven’t been doing any SQL Server in the cloud. I probably should.

Tara Kizer: I’d say maybe half of our clients, at least my clients, are in the cloud; maybe a little less than half. Of those, most are using AWS EC2 specifically. I don’t think I’ve had many Azure clients, and I haven’t had any Google Cloud clients yet. The common theme for the cloud clients: all of them are experiencing slow I/O. That’s not always the major culprit, but definitely slow I/O on those servers…

Richie Rump: I think that’s a big problem with the cloud, period, just slow I/O.

Tara Kizer: Yeah, companies are looking to save money, so they’re like, “Look at this cheap disk we could use for our implementation here.” You don’t get very many IOPS out of that.

Richie Rump: You should tell the managers, every time you see cheap, replace that with slow, and then reread the sentence and tell me what you think.

Is there a lot of age prejudice against DBAs?

Tara Kizer: Alright, Thomas asks, “Do you guys interview people for companies, and if so, do you see any prejudice towards older workers in database work?” We do offer that as a service; Brent is the one who has been handling it. I’m not sure exactly how you request it, but look on our website and contact us if you’d like us to do that. As for prejudice towards older workers, I don’t know that I’m seeing any, and Brent, who tackles the interviewing, isn’t here to answer that part. A lot of our clients don’t have DBAs or anyone knowledgeable about SQL Server, or they don’t even have much of an IT staff. Some of these companies have like two people in their entire IT department. So if you’re looking to hire a SQL Server developer or DBA and you don’t have the knowledge to interview candidates, we do offer that as a service.

Richie Rump: Yeah, but I’ve read some articles about how that’s a problem, especially in Silicon Valley, and not only age but diversity in hiring as well. It’s interesting; it seems to be more of a problem on the West Coast. I haven’t run into it, especially not in the database area. I think DBAs tend to be a bit older because you don’t come out of college knowing how to be a DBA. You learn how to do other stuff, and then you somehow fall into the DBA role.

(Brent says: nah, I’m not seeing any age discrimination at clients because DBA is one of those positions that really rewards (or requires) experience.)

Do you recommend Availability Groups for high availability?

Tara Kizer: Let’s see, Kush asks, “If there are no requirements for offloading reads, backups, et cetera, would you still recommend Availability Groups for high availability?” Well, since I’m the one on the call: I love Availability Groups. As a production DBA, I implemented them for everything. I know how to use them, I know how to configure them, and I know the issues behind the production outages they’ve caused that I’ve experienced. So even if I don’t need to offload reads or backups, yes, definitely.

They’re still a great HA and DR solution without offloading reporting reads or backups. I don’t even like offloading backups; I don’t really see the need. Just how much load are backups adding to your primary replica that you’d have to offload that task? There is latency on the secondary replicas, even on a synchronous-commit secondary, and I want my backups up to date to minimize data loss.

Richie Rump: I wouldn’t recommend it at all, because I don’t know it at all. I’d just say go to the cloud, SQL Server in the cloud, and guess what, it handles all the backups and all the replication stuff for you. Aurora does all that; you can do it pretty easily in just a couple of clicks and, “Oh look, cluster, woo, fun…”

Tara Kizer: Yeah, and if you’re looking for simpler HA, think about database mirroring. It still exists: yes, it’s been deprecated since SQL Server 2012, but it’s still in 2016, and I imagine in 2017 too, though I haven’t touched that yet. Use synchronous database mirroring with a witness, and that witness can be SQL Server Express Edition on a tiny virtual machine with hardly any resources. You need that third server if you want automatic failovers; if you don’t care about that, just the two servers are fine. Synchronous database mirroring is a great HA solution. It’s easier to implement, it doesn’t have as many issues as availability groups, and it’s not complicated at all. Failover cluster instances are a bit complicated; once you know them, they’re not so bad, but you need clustering knowledge to implement them, and it’s the same with availability groups.

Richie Rump: You know what I find funny? Our website has a failover backup. I’m like, “Oh, how did it get so big?”

Tara Kizer: Yeah, it was crazy reading Brent’s blog post about our website; I think it’s on his Ozar.me site. He talks about the strategy he has for Black Friday, what he has to think about to make sure it scales, and the issues he ran into last year, when the site was down for a little while.

Richie Rump: I think that post was fascinating. He said we’re going to be doing this, and I had to be notified because the website had to be down for a certain period while they built the new cluster. Then Brent told me the price and I’m like, “Okay, I guess, you know…”

Tara Kizer: It’s shocking, the price…

Richie Rump: Yeah, you know, it’s like $6,000 a month, and here I am working on serverless products where it’s, “Hey, ten bucks, man, there you go, there’s all the compute time you need for the next month.” But it makes total sense: we want it to be rock solid so that when people all come rushing in, we can handle that load.

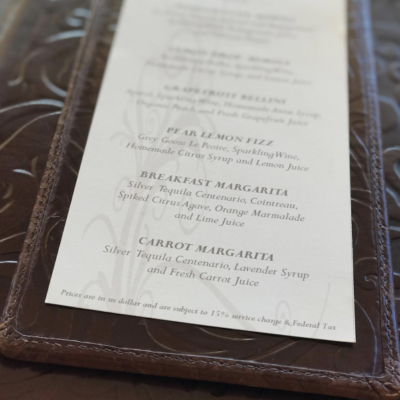

What precautions should we take before patching?

Tara Kizer: Alright, a question from Shree: “Precautions to take before we apply service packs.” I always recommend applying service packs in your test environments first and letting them burn in there for a while. When you decide to do production, you’re obviously in a maintenance window, so make sure you have solid backups. I don’t usually kick off the full backup job before applying a service pack. I just know that my full backup runs at night, I’ve got transaction log backups all day, and we restore those in other environments.

I know my backups are good; if you don’t know that yours are good, maybe you should kick off a full backup. I’ve never had a service pack install go so badly that I needed a complete restore or reinstall. But that really is the only precaution, besides running it in a test environment first.

What performance issues pop up in the cloud?

Tara Kizer: Alright, Michael asks, “I think Tara hinted at the answer, but what are the root cause issues regarding SQL Server performance in the cloud?” As far as I/O issues go, the root cause is that you’re not buying disks with the I/O performance your server needs. You’re seeing severe I/O slowness because you haven’t spent enough money on the disks allocated to your box.

You have to be very careful when you’re selecting your instance types that you’re able to go up in IOPS if needed, because some instance types max out at a certain number and that’s it. I know in AWS you can start striping disks, but it gets complicated. I think the ceiling on EC2 for a certain type is either 1,000 IOPS or 3,000; I can’t remember which. If you need more than that, you have to start doing more complicated things: striping disks, or picking a different instance type that lets you select disks with higher IOPS.
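To make the striping trade-off concrete, here’s a small back-of-the-envelope sketch in Python. It rests on a simplifying assumption: a striped set can at best add up its member volumes’ IOPS, while the instance type still imposes its own overall storage ceiling. The function name and the numbers are hypothetical, not actual AWS limits.

```python
from typing import Iterable


def effective_iops(volume_iops: Iterable[int], instance_cap: int) -> int:
    """Rough upper bound on IOPS for a set of volumes striped together.

    Simplifying assumption: striping can at best sum the member volumes'
    IOPS, but the instance type's own storage ceiling still caps the total.
    """
    if instance_cap <= 0:
        raise ValueError("instance_cap must be positive")
    volumes = list(volume_iops)
    if any(v < 0 for v in volumes):
        raise ValueError("volume IOPS cannot be negative")
    return min(sum(volumes), instance_cap)


if __name__ == "__main__":
    # Hypothetical numbers for illustration only; check your provider's docs.
    print(effective_iops([3000, 3000], instance_cap=10000))
    print(effective_iops([3000, 3000, 3000, 3000], instance_cap=10000))
```

The second call shows the point Tara makes: past a certain stripe width, adding disks stops helping, and the fix becomes a different instance type with a higher ceiling.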

Richie Rump: Yeah, I think the other part of that, especially for SQL Server in the cloud on the Azure side, is index maintenance too. They just announced this week that SQL Azure is going to have auto-tuning on by default for all new instances. That makes sense, because people throw their stuff in the cloud and a lot of them probably don’t know how to tune their own databases. Auto-tuning would probably help about 80% of folks. The other 20% would be like, “Well, why is this index there?” and blah, blah, blah, but you can turn it on and off. But as usual, when you’re tuning a query, indexes are probably the first thing you need to look at.

Tara Kizer: Yeah, and look at your wait stats if you’re able to. I don’t know whether you can on some of these instance types; maybe on Amazon RDS, I’m not sure. Look at your wait stats and see what SQL Server is waiting on; if it’s disk, you’re going to see specific wait types. What is your top wait? If it’s CXPACKET, that doesn’t really pertain to I/O. You may be experiencing severe I/O slowness, but if SQL Server rarely has to go to disk, is it really impacting you? You’ve got to know your wait stats as well.
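As a rough illustration of reading wait stats with I/O in mind, here’s a small Python sketch. It assumes you’ve already pulled the `wait_type` and `wait_time_ms` columns from `sys.dm_os_wait_stats` into a list of pairs. The list of I/O-related wait types is a short, non-exhaustive assumption, and a real script would also filter out benign background waits before ranking.

```python
from typing import Iterable, List, Tuple

# Assumption: a short, non-exhaustive list of wait types usually tied to storage.
IO_WAIT_PREFIXES = ("PAGEIOLATCH_", "WRITELOG", "IO_COMPLETION", "ASYNC_IO_COMPLETION")


def top_waits(
    waits: Iterable[Tuple[str, int]], n: int = 5
) -> List[Tuple[str, int, bool]]:
    """Return the n largest (wait_type, wait_time_ms) pairs, flagging I/O waits.

    Each result is (wait_type, wait_time_ms, is_io_related).
    """
    ranked = sorted(waits, key=lambda w: w[1], reverse=True)[:n]
    return [(name, ms, name.startswith(IO_WAIT_PREFIXES)) for name, ms in ranked]


if __name__ == "__main__":
    # Hypothetical sample rows, shaped like sys.dm_os_wait_stats output.
    sample = [
        ("CXPACKET", 900_000),
        ("PAGEIOLATCH_SH", 400_000),
        ("SOS_SCHEDULER_YIELD", 200_000),
        ("WRITELOG", 50_000),
    ]
    for name, ms, is_io in top_waits(sample, n=3):
        print(f"{name:<22} {ms:>10} ms  {'I/O' if is_io else ''}")
```

In this made-up sample the top wait is CXPACKET, so even though PAGEIOLATCH_SH shows up, storage isn’t the first thing to chase.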

I’m getting this error number…

Tara Kizer: Gordon asks, “Getting an error with availability groups…” Blah, blah, blah, I don’t know. I don’t have error numbers memorized. I would suggest posting your question over at Stack Exchange and asking over there, and provide some more detail so people can help you out there.

Richie Rump: Gordon, I’m sorry, I thought she would know that error right off the top of her head, so you and me are in the same boat, buddy.

Tara Kizer: Yeah, and I suspect there’s more to that error as well. “Failed to join local availability group replica to AG”: I need more info. Maybe it’s not at the right LSN yet.

Do I need AG knowledge before deploying AGs?

Tara Kizer: Kush follows up on his, or her, I’m not sure, availability group question from earlier: “Is the recommendation based on having availability group knowledge?” Yes, definitely, but that doesn’t mean you can’t deploy it if you don’t have any knowledge yet. You’ve got to get the knowledge, though. The way I learned was we installed it in a test environment, a QA environment, and a load test environment. We thoroughly tested availability groups and then went to production many months later.

Kush prefers failover cluster instances over availability groups. Brent and Erik would agree with you. In my experience, I like availability groups better because they solve so many issues, whereas with failover cluster instances I have to add another feature to get DR. FCIs provide HA, but they don’t provide DR for me.

Richie Rump: Yeah, do we have an availability groups training course coming soon?

Tara Kizer: Yes. I’m not sure of the dates, but I know there’s one in December from Edwin. It was really popular the first time he did it, and Brent was on the call helping out in case any issues came up. I watched a bit of it; it’s really good material.

Richie Rump: And our Black Friday sales are coming up soon for that, so if you want to jump in on that, stay up late.

Tara Kizer: Yeah, and we’ve currently got great sales on Brent’s Mastering series. I’m really excited about that because it’s all hands-on, and I learn by doing. Most of that is going to be in production, but you get your own virtual machine, so it’s hands-on there.

Richie Rump: Yeah, I fail by doing, so that’s the way that works. Oh, that doesn’t work? Oh okay, move on.

I’ve got this Analysis Services problem…

Tara Kizer: Okay, Vlad asks something about Analysis Services, linked dimensions… I don’t know, I’m not a data warehouse person. I’ve actually never even touched Analysis Services, so I can’t even go there. Richie, have you done Analysis Services?

Richie Rump: I have, but very minimal, to the point where I could get something up and running and that’s it. I would go over to DBA.StackExchange.com and ask the question there. Yeah, I can’t answer that question with any sort of authority whatsoever.

Tara Kizer: Yeah, at my past companies we didn’t use Analysis Services. We’ve had data warehouses, but it’s always been Cognos, Informatica, and other non-Microsoft products.

Richie Rump: I’ve used all of that…

Tara Kizer: Yep, and the thing to know about Cognos and Informatica is that certain versions of them don’t work well with availability groups when you need a multi-subnet configuration and readable secondaries.

Do I need to pause replication when patching?

Tara Kizer: Alright, Shree asks, “Also, if you have replication enabled, do you just stop the replication agent jobs and apply patches?” I don’t even do that for patching. I just install as is: services get stopped, the patches are applied, and the services are restarted. I don’t stop replication or anything else as far as patching goes. The only thing we stop is application access, shutting down the application so it goes down gracefully, since this is a planned maintenance window. So I don’t stop Agent jobs, replication, anything.

Where’s everybody at?

Tara Kizer: Michael says, “Thanks for doing Office Hours, very much appreciated.” Yeah, we’re out of questions, so if anyone wants to get any last-minute questions in, go ahead; otherwise we’re going to end the call early. Sorry, it’s just Richie and me fielding your questions today. Brent is enjoying Cabo; he’s in vacation mode, and Erik is too. I think he’s in San Francisco, something like that.

Richie Rump: So, he’s in Cabo. I didn’t know that that’s where he went. I knew he went to Mexico, I didn’t know where in Mexico. See, that’s the extent of the questions that I ask.

(Brent says: The Resort at Pedregal. Erika got a really good Black Friday deal last year. That’s basically how our money handling works: we run Black Friday sales, and we take that money, and we…blow it on vacations. You get training and pictures of Mexico. Everybody wins.)

Tara Kizer: I only know because we’re Facebook friends and I look at Facebook and I saw his pictures and stuff.

Richie Rump: I just skim. Oh, Brent, alright… Actually, my data warehouse platform of choice is Teradata. I got some training on it and got an understanding of how that thing works, and I was able to get like five billion rows into Teradata and do some pretty cool stuff against it. So I probably know a bit more about Teradata than I do about SSAS, which is pretty embarrassing for a Microsoft guy, but I’m in AWS all day long, so I guess I’m not that much of a Microsoft guy in the first place.

Tara Kizer: Wasn’t there a link you shared where someone was saying that Oracle and Teradata are legacy products?

Richie Rump: Yeah, and I just kept scrolling to find out, “What are you selling?” And that’s what it was: someone saying, “We will help you get to the cloud and off your legacy stuff.” And it’s like, “Yeah, Teradata and Oracle aren’t legacy stuff.” The people who need that kind of performance and power aren’t going to the cloud; not yet, because of the aforementioned speed, the size of the data, and all that stuff. They’re not going up there yet.

What’s Kendra up to these days?

Tara Kizer: Alright, Michael says, “For those interested in an Ozar alumni, Kendra Little, one of the founders of the company, she has a website, SQLWorkBooks.com. Great hands-on info regarding query tuning and much more.” Definitely, there’s a lot of good stuff there, free stuff, and she’s building up all of her training material over at SQLWorkBooks.com. And if you’re a blog reader, which hopefully you are, LittleKendra.com is where she blogs.

Richie Rump: Yeah, and she’s on the $1 denomination [crosstalk]…

Tara Kizer: No, I’m the $1. Brent is too. Is he going to send that stuff to us? I hope so…

Richie Rump: He is, I’m sure, once he gets back from Mexico we’ll get all that stuff. But she is on a query buck, one of them, maybe five. I know I’m 20; I’m on the 20.

Tara Kizer: My client this week, they sent one of their people to Erik and Brent’s pre-con, so they had the query bucks, but they didn’t receive all the denominations. They had mine so they showed that to me on the video, but they didn’t have them all. Not sure how that worked at the pre-con…

Richie Rump: So just for people who are confused, we do have like actual printed out query bucks with all these denominations on it and different people are on the denomination. I think Paul White’s on the 100, as he probably should be.

Should DR servers have the same Agent jobs?

Tara Kizer: Alright, Greg asks, “Should production ship agent jobs to the disaster recovery server?” Yes. If you’ve got HA/DR and you’re using an availability group, even your HA replica is another SQL Server instance. With failover cluster instances you don’t have to worry about that, because it’s just one instance, but availability groups, log shipping, and database mirroring all involve another SQL Server instance that needs the jobs, the logins, and all the other stuff that isn’t being, quote unquote, mirrored to that other server.

Even with transactional replication, if you’re using it as an HA or DR solution (which I don’t agree with), someone has to set up those external objects. By external, I mean external to the user database: things stored in master and msdb, such as logins and jobs. Those are the two most common things you need to keep in sync, making sure they always get updated on the other server. I used to do this manually. There are probably tools out there that can help you script that stuff out, but you definitely need to keep those other servers in sync in case you ever have to fail over to them.

This matters especially on an automatic-failover, synchronous-commit availability group replica: if it fails over at two in the morning and critical jobs are missing over there, they won’t run, so make sure those are over there as well.
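Here’s a minimal sketch of the “keep them in sync” check, in Python. It assumes you’ve already listed Agent job names (for example from `msdb.dbo.sysjobs`) and login names (for example from `sys.server_principals`) on each instance. The function name and the job names are hypothetical. It only flags names missing on the DR side, so it’s a starting point, not a replacement for scripting out the objects themselves.

```python
from typing import Iterable, List


def missing_on_dr(primary: Iterable[str], dr: Iterable[str]) -> List[str]:
    """Names that exist on the primary but not on the DR instance, sorted.

    Only compares names; it does not compare job steps, schedules,
    login SIDs, passwords, or permissions. The comparison is
    case-sensitive, which may not match your server collation.
    """
    return sorted(set(primary) - set(dr))


if __name__ == "__main__":
    # Hypothetical job names for illustration.
    primary_jobs = ["DatabaseBackup - FULL", "DatabaseBackup - LOG", "IndexOptimize", "Nightly ETL"]
    dr_jobs = ["DatabaseBackup - FULL", "IndexOptimize"]
    for job in missing_on_dr(primary_jobs, dr_jobs):
        print(f"Missing on DR: {job}")
```

The same function works for logins. Run it in both directions if the DR server can also become the primary.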

Have you worked with Epic data warehouses?

Richie Rump: Yeah, so Wes Crocket has a question, “Have you guys ever worked with Epic Data Warehouse or reporting environments?” I can say no; I have successfully avoided Epic in my career, so there.

Tara Kizer: I haven’t either. I haven’t really touched anything data warehouse. Companies I’ve worked at have had data warehousing solutions, so I had to get access to those systems. For Informatica, we had to set up a transactional replication publication with no subscriber, because Informatica connects directly to the distribution database; that was crazy, and it certainly created some blocking issues for us. But yeah, I haven’t really touched much data warehouse work.

I’ve looked at some clients’ data warehouse servers, though I shouldn’t say a lot; it’s less than 10% of my clients. Even then, I’m not looking at Analysis Services or the actual data warehouse product; I’m looking at the SQL Server instance.

How do you do security scans in SQL Server?

Tara Kizer: Okay, Shree asks, “How do you do security checks and scans in SQL Server?” I don’t, that’s how I do it. Ask someone else. I’ve certainly been at companies where we’ve had security audits, and they require some pretty strict things and we would just say, “Not going to do that, not going to do that.” We don’t specialize in security here, so I’m not comfortable talking about that topic.

Richie Rump: Yeah, I think Denny Cherry has a book on SQL Server security that we recommend you check out.

Tara Kizer: Alright, Shree is asking, “sp_Blitz or any other tools?” No, that’s not going to help you out – none of the Blitz stuff is going to help you out with another – oh it’s asking about security. Sorry, these things aren’t linked together in the questions panel. No, not going to help you there.

Richie Rump: No, and I’m not going to write that either, so… You’re welcome to contribute, sp_BlitzSecurity.

Tara Kizer: I think some people have actually asked for that, or thought about adding that to the blitz stuff and Brent said no, let’s keep that out. I’m not positive on that though.

Richie Rump: Yeah, it seems like a completely different script, and one I want nothing to do with. Sorry, folks.

(Brent says: exactly, we just don’t specialize in security. You don’t want amateurs doing your security, not in this day and age. You wouldn’t wanna hire a security team to fix a performance problem, either – it’s important to understand the strengths of who you’re hiring. If someone claims to be great at everything, then they’re probably not even good at anything. They don’t even know what they don’t know.)

Should we skip SQL Server 2016?

Tara Kizer: Adam asks, “My company started upgrading to 2016 but halted due to experiencing increased CPU performance. Now SQL 2017 is being touted as being even better performing. Should we consider going straight to 2017 and scrap the 2016 upgrade?” Well, you need to look into the new cardinality estimator. I’m assuming that you’re on 2012 or lower and I think maybe what you’re experiencing with the increased CPU utilization on 2016 is performance issues due to the new cardinality estimator that was introduced in 2014. So I would advise that you look into that.

There are things you can do to get the old cardinality estimator back, but I don’t advise lowering the compatibility level or adding the startup trace flag for the whole system. You need to figure out which queries are having issues and find the CPU culprits. You can use sp_BlitzCache sorted by CPU and by average CPU (@SortOrder = 'cpu' and @SortOrder = 'avg cpu') to determine your CPU offenders, and then figure out what’s wrong with them. Do those need the old cardinality estimator? You can add the QUERYTRACEON trace flag to individual queries, so that’s what we recommend. We don’t recommend changing the cardinality estimator at the database or instance level, whether via the compatibility level or an instance-wide trace flag. Instead, find the culprit queries and see if downgrading the cardinality estimator on those specific ones helps.

I don’t think that upgrading to 2017 versus 2016 is going to fix this. You need to figure out what’s happening here, and I suspect just based on experience with clients and reading blog articles out there, a lot of people are having issues with the new cardinality estimator.
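To illustrate the per-query approach, here’s a small Python helper that appends the query-level trace flag to a single culprit statement instead of changing anything database- or instance-wide. Trace flag 9481 is the documented flag for the legacy (pre-2014) cardinality estimator; the helper name and sample query are hypothetical. Note that QUERYTRACEON generally needs sysadmin-level permissions, and on SQL Server 2016 SP1 and later, `OPTION (USE HINT ('FORCE_LEGACY_CARDINALITY_ESTIMATION'))` is an alternative that doesn’t.

```python
import re


def with_legacy_ce(statement: str) -> str:
    """Append a query-level hint asking for the legacy cardinality estimator.

    Uses OPTION (QUERYTRACEON 9481). Assumes a single statement with no
    existing OPTION clause; merge hints by hand otherwise.
    """
    body = statement.rstrip().rstrip(";").rstrip()
    if re.search(r"\bOPTION\s*\(", body, flags=re.IGNORECASE):
        raise ValueError("statement already has an OPTION clause")
    return f"{body}\nOPTION (QUERYTRACEON 9481);"


if __name__ == "__main__":
    # Hypothetical culprit query, e.g. one surfaced by sp_BlitzCache.
    print(with_legacy_ce("SELECT TOP (100) * FROM dbo.Posts WHERE OwnerUserId = @UserId;"))
```

Test each hinted query against the same workload before and after: the legacy estimator helps some queries and hurts others, which is exactly why it’s applied one query at a time.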

How can I restore multi-terabyte databases faster?

Tara Kizer: Alright, Dee asks, “We have large databases, 1TB on one primary file. They are all simple recovery model. They take a long time to backup even with compression. How hard would they be to restore if broken into multiple files? Very limited staff and no DR…” For very large databases, I still think you should do SQL Server backups, but you may want to look into snapshot technologies as well. SAN snapshots can back up a 20TB database in about a second. Make sure those snapshots go through VSS (the Volume Shadow Copy Service) so they are valid SQL Server backups. You can also copy massive databases over to another server or a test server in just a few minutes via snapshots.

As far as breaking the database into multiple data files, I don’t know that that’s going to help. Breaking your backups into multiple files might. One of the tests I did at Dell DBA Days last year in Austin was on backup files, and I think four backup files was the best number for backup performance. Going higher wasn’t very helpful and going lower wasn’t very helpful; four was the sweet spot. So take a look at that. But for full backups of large databases, I would look at other technologies to help you out.
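If you want to try the multiple-backup-file idea, here’s a small Python sketch that generates a striped `BACKUP DATABASE` statement. The four-file default just mirrors the sweet spot from Tara’s own tests, not a universal rule. The function name, database name, folder, and file-naming pattern are hypothetical, so benchmark a few stripe counts on your own hardware.

```python
def striped_backup_sql(database: str, folder: str, stripes: int = 4) -> str:
    """Build a BACKUP DATABASE statement that writes to several files at once.

    Every stripe is needed to restore, so keep the whole set together.
    """
    if stripes < 1:
        raise ValueError("stripes must be at least 1")
    quoted = database.replace("]", "]]")  # escape the name for [bracketed] identifiers
    safe_folder = folder.replace("'", "''")  # escape quotes inside N'...' literals
    safe_file = database.replace("'", "''")
    targets = ",\n    ".join(
        f"DISK = N'{safe_folder}\\{safe_file}_{i}of{stripes}.bak'"
        for i in range(1, stripes + 1)
    )
    return (
        f"BACKUP DATABASE [{quoted}]\n"
        f"TO {targets}\n"
        "WITH COMPRESSION, CHECKSUM, STATS = 10;"
    )


if __name__ == "__main__":
    # Hypothetical database name and backup folder.
    print(striped_backup_sql("SalesDB", r"X:\Backups"))
```

The matching `RESTORE DATABASE` has to list every one of the stripe files, so losing one file means losing the backup.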

What database podcasts do you listen to?

Tara Kizer: Alright, let’s see, Chris asks, “Do you have any good tech database podcasts that you regularly listen to?” Richie can probably answer that.

Richie Rump: Actually, I’m more of a content creator than I am a consumer, and now that I don’t travel anymore, my podcast listenage has just gone straight down the tubes. But yes, I do know a bunch of podcasts. One is mine, Away From The Keyboard, where we interview technologists but don’t talk about technology. We just kind of get behind the person and the technology and really talk more about who they are and what they’ve done in their career. I know that SQL Server Radio, I think that’s Matan Yungman’s podcast. They’re out in Israel, they do a really good job there. SQL Down Under – I don’t know how frequently that is being produced, but I remember listening to that a lot. SQL Compañeros, that’s another one that’s out there as well. Am I missing one? I’m pretty sure I’m missing more than one.

Kendra has one too that’s considered a podcast, though it’s really a videocast. So yeah, there are a bunch of them out there. Just do a Google search for SQL Server podcasts and a bunch will come up, probably including some I mentioned. Check them out and see which ones you like. Listen to them all, listen to one of them, don’t listen to any of them. Listen to mine, though; that’s the one that really matters.

Is there a DBA shortage?

Tara Kizer: Dorian asks, “I saw an article about there being a DBA shortage. Are you seeing any of that?” I’m interested in seeing how the job market is doing, so I look at all the LinkedIn emails and all the recruiter emails and definitely in the market that I’m in, San Diego, California, SQL Server DBA jobs are remaining open for a very long time. We’re talking like weeks upon weeks upon weeks. Definitely a DBA shortage here.

Maybe companies need to start investing in the employees they have and training them to do senior-level work, because that’s what all the job postings I’m seeing are for: senior DBAs. I’m not seeing intermediate or junior positions popping up, so the shortage is on the senior side. Maybe look for someone on the developer side who’s interested, because some developers want to switch over to being DBAs, though usually not a lot. It’s more often the sysadmins, the Windows administrators, who want to jump over to the DBA side. Invest in them, invest internally, and get those people up to the senior DBA level.

Does SQL Server have dynamic partitioning like Oracle?

Tara Kizer: Sreejith asks, “Anything close to interval partitioning from Oracle, or a dynamic partition feature, in any of the newer versions, SQL Server 2016/17?” I don’t even know what those features do, so I can’t tell you whether there’s an equivalent in SQL Server. If Erik were here, he might be able to help.

Richie Rump: Erik would know that from the Oracle side. But I haven’t used Oracle since my second job out of college, which was twen… years ago, so yeah. I’m behind on my features just a little bit.

Is San Diego a nice place to live?

Tara Kizer: Ben asks, “Is San Diego a nice place to live?” It sure is. That’s why our mortgages are so high and rent is so high. In my area, you can’t even get a two bedroom apartment for less than $2200 per month. And I live in just middle-class America here, this is nothing fancy whatsoever. Definitely not a bad area, it’s just regular old middle-class people. It did rain yesterday.

Richie Rump: Yeah, but your baseball team is terrible. I mean really, I mean…

Tara Kizer: We don’t have a football team…

Richie Rump: Yeah, your football team got up and left for no reason. It’s like, I don’t know, man, you can’t have baseball and football.

Tara Kizer: Now that the Chargers are with the trader Las… I’m not a sports person. I grew up on sports, heavily sports family, but sports is not my thing to watch. Anyway, everybody’s into the San Diego State Aztec football team now because they’re really good, so they’ve got a lot of attendance at their local football games.

Richie Rump: Yeah, but are they 8-0? No they’re not…

Tara Kizer: I don’t know, I don’t follow…

Richie Rump: My school is, that’s right, class of ’97, University of Miami. I’m not saying we’re back, but if we win this weekend, then we’re back.

Why do you wear a winter coat in San Diego?

Tara Kizer: One last comment from Thomas, “You live in San Diego and wear a winter coat inside?” Yes, I am wearing a down puffy jacket, and this thing’s pretty significant. I’m actually getting kind of warm here. But it’s chilly here in the mornings and the sun doesn’t hit my house until the afternoon, so where my desk is, it stays cool all day long. I usually don’t have to run the air conditioning until the afternoon, even when it’s 100 degrees outside.

Richie Rump: Yeah, I would typically wear a hoodie, except I’ve got the lights on me right now, so I’ll probably put it on after I bring the lights down a bit. But yeah, us warm-weather people are really crazy: it gets below like 78 and we’re like, “Jacket time, suckers. I’m not getting cool for this, no.”

Tara Kizer: Alright, Greg says, “22 above and I’m in Minnesota.”

Richie Rump: You enjoy that. Yeah, my refrigerator’s not even 22, you know.

Tara Kizer: Alright guys, that’s the end of this call, we’ll see you next week.

Registration is open now for our new 2018 class lineup.


You’ll go back to the office with free scripts, great ideas, and even a plan to convince the business to upgrade to SQL Server 2016 or 2017 ASAP.
