You read a lot of advice that says you shouldn’t shrink databases, but…why?

To demonstrate, I’m going to:

- Create a database

- Create a ~1GB table with 5M rows in it

- Put a clustered index on it, and check its fragmentation

- Look at my database’s empty space

- Shrink the database to get rid of the empty space

- And then see what happens next.

Here are the first three steps:

CREATE DATABASE WorldOfHurt;

GO

USE WorldOfHurt;

GO

ALTER DATABASE [WorldOfHurt] SET RECOVERY SIMPLE WITH NO_WAIT

GO

/* Create a ~1GB table with 5 million rows */

SELECT TOP 5000000 o.object_id, m.*

INTO dbo.Messages

FROM sys.messages m

CROSS JOIN sys.all_objects o;

GO

CREATE UNIQUE CLUSTERED INDEX IX ON dbo.Messages(object_id, message_id, language_id);

GO

SELECT * FROM sys.dm_db_index_physical_stats

(DB_ID(N'WorldOfHurt'), OBJECT_ID(N'dbo.Messages'), NULL, NULL , 'DETAILED');

GO

And here’s the output – I’ve taken the liberty of rearranging the output columns a little to make the screenshot easier to read on the web:

Near zero fragmentation – that’s good

We have 0.01% fragmentation, and 99.7% of each 8KB page is packed in solid with data. That’s great! When we scan this table, the pages will be in order, and chock full of tasty data, making for a quick scan.
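If you'd rather not rearrange columns by hand, here's a minimal sketch of the same DMV call trimmed down to the numbers discussed above. The column selection is my assumption about what's worth looking at, not the exact layout of the screenshot, and it assumes you're still in the WorldOfHurt database so `OBJECT_ID` resolves correctly.

```sql
/* Sketch: same DMV call as above, limited to the columns discussed in the text.
   The column list is an illustrative choice, not the original screenshot layout. */
SELECT index_level,                      /* 0 = leaf level of the index */
       page_count,
       avg_fragmentation_in_percent,     /* how out-of-order the pages are */
       avg_page_space_used_in_percent    /* how full each 8KB page is */
FROM sys.dm_db_index_physical_stats
     (DB_ID(N'WorldOfHurt'), OBJECT_ID(N'dbo.Messages'), NULL, NULL, 'DETAILED')
ORDER BY index_level;
```

The leaf level (`index_level = 0`) is the row that matters most for scans, since that's where the table's data pages live.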

Now, how much empty space do we have?

Let’s use this DBA.StackExchange.com query to check database empty space:

USE WorldOfHurt;

GO

SELECT

[TYPE] = A.TYPE_DESC

,[FILE_Name] = A.name

,[FILEGROUP_NAME] = fg.name

,[File_Location] = A.PHYSICAL_NAME

,[FILESIZE_MB] = CONVERT(DECIMAL(10,2),A.SIZE/128.0)

,[USEDSPACE_MB] = CONVERT(DECIMAL(10,2),A.SIZE/128.0 - ((SIZE/128.0) - CAST(FILEPROPERTY(A.NAME, 'SPACEUSED') AS INT)/128.0))

,[FREESPACE_MB] = CONVERT(DECIMAL(10,2),A.SIZE/128.0 - CAST(FILEPROPERTY(A.NAME, 'SPACEUSED') AS INT)/128.0)

,[FREESPACE_%] = CONVERT(DECIMAL(10,2),((A.SIZE/128.0 - CAST(FILEPROPERTY(A.NAME, 'SPACEUSED') AS INT)/128.0)/(A.SIZE/128.0))*100)

,[AutoGrow] = 'By ' + CASE is_percent_growth WHEN 0 THEN CAST(growth/128 AS VARCHAR(10)) + ' MB -'

WHEN 1 THEN CAST(growth AS VARCHAR(10)) + '% -' ELSE '' END

+ CASE max_size WHEN 0 THEN 'DISABLED' WHEN -1 THEN ' Unrestricted'

ELSE ' Restricted to ' + CAST(max_size/(128*1024) AS VARCHAR(10)) + ' GB' END

+ CASE is_percent_growth WHEN 1 THEN ' [autogrowth by percent, BAD setting!]' ELSE '' END

FROM sys.database_files A LEFT JOIN sys.filegroups fg ON A.data_space_id = fg.data_space_id

order by A.TYPE desc, A.NAME;

The results:

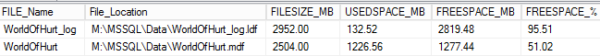

Well, that’s not good! Our 2,504MB data file has 1,277MB of free space in it, so it’s 51% empty! Let’s say we want to reclaim that space by shrinking the data file back down.

Let’s use DBCC SHRINKDATABASE to reclaim the empty space.

Run this command:

DBCC SHRINKDATABASE(WorldOfHurt, 1);

It’ll move pages around inside the WorldOfHurt database to leave just 1% free space. (You could even go with 0% if you want.) Then rerun the free-space query from above to see how the shrink worked:

Woohoo! The data file is now down to 1,239MB, and only has 0.98% free space left. The log file is down to 24MB, too. All wine and roses, right?

But now check fragmentation.

Using the same query from earlier:

SELECT * FROM sys.dm_db_index_physical_stats

(DB_ID(N'WorldOfHurt'), OBJECT_ID(N'dbo.Messages'), NULL, NULL , 'DETAILED');

And the results:

Suddenly, we have a new problem: our table is 98% fragmented. To do its work, SHRINKDATABASE goes to the end of the file, picks up pages there, and moves them to the first open available spaces in our data file. Remember how our object used to be perfectly in order? Well, now it’s in reverse order because SQL Server took the stuff at the end and put it at the beginning.
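To look at the page-order effect from another angle, the same DMV also reports how many contiguous runs of pages (fragments) the index is split into. This is a sketch under the assumption that you're in the WorldOfHurt database; I'm not showing expected numbers because they'll vary from run to run.

```sql
/* Sketch: fragment-oriented view of the same index, leaf level only.
   A fragment is a run of physically consecutive pages in the index. */
SELECT page_count,
       fragment_count,                 /* number of contiguous runs */
       avg_fragment_size_in_pages,     /* pages per run; small values mean scattered pages */
       avg_fragmentation_in_percent
FROM sys.dm_db_index_physical_stats
     (DB_ID(N'WorldOfHurt'), OBJECT_ID(N'dbo.Messages'), NULL, NULL, 'DETAILED')
WHERE index_level = 0;
```

Run it before and after the shrink: the closer `fragment_count` gets to `page_count`, the less the physical page order matches the index order.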

So what do you do to fix this? I’m going to hazard a guess that you have a nightly job set up to reorganize or rebuild indexes when they get heavily fragmented. In this case, with 98% fragmentation, I bet you’re going to want to rebuild that index.
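For illustration, the check inside that kind of nightly job often boils down to something like the query below. This is a hypothetical sketch, not any particular maintenance script: the `@FragThreshold` variable and its value of 30 are made up for the example, and real jobs usually add more filters.

```sql
/* Hypothetical sketch of a maintenance job's "what's fragmented?" check.
   @FragThreshold is an illustrative value, not a recommendation. */
DECLARE @FragThreshold FLOAT = 30.0;

SELECT OBJECT_NAME(ps.object_id) AS table_name,
       i.name                    AS index_name,
       ps.avg_fragmentation_in_percent,
       ps.page_count
FROM sys.dm_db_index_physical_stats(DB_ID(), NULL, NULL, NULL, 'LIMITED') AS ps
JOIN sys.indexes AS i
  ON i.object_id = ps.object_id
 AND i.index_id  = ps.index_id
WHERE ps.avg_fragmentation_in_percent >= @FragThreshold
  AND ps.index_id > 0                  /* skip heaps */
ORDER BY ps.avg_fragmentation_in_percent DESC;
```

Right after the shrink, our IX index on dbo.Messages would land on that list.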

Guess what happens when you rebuild the index?

SQL Server needs enough empty space in the database to build an entirely new copy of the index, so it’s going to:

- Grow the data file out

- Use that space to build the new copy of our index

- Drop the old copy of our index, leaving a bunch of unused space in the file
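To get a rough feel for how much room that new copy needs, you can check how big the existing index is and compare it to the free space reported by the earlier query. This is a back-of-the-napkin sketch: it assumes the clustered index is `index_id = 1` (true for any clustered index) and that you're in the WorldOfHurt database, and the actual space a rebuild uses depends on the options you pick.

```sql
/* Rough estimate: pages currently used by the clustered index on dbo.Messages.
   Each page is 8KB, so pages * 8 / 1024 gives megabytes. */
SELECT SUM(ps.used_page_count) * 8 / 1024.0 AS index_size_mb
FROM sys.dm_db_partition_stats AS ps
WHERE ps.object_id = OBJECT_ID(N'dbo.Messages')
  AND ps.index_id  = 1;
```

If `index_size_mb` is bigger than the data file's FREESPACE_MB, the file has to grow before the rebuild can finish.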

Let’s prove it – rebuild the index and check fragmentation:

ALTER INDEX IX ON dbo.Messages REBUILD;

GO

SELECT * FROM sys.dm_db_index_physical_stats

(DB_ID(N'WorldOfHurt'), OBJECT_ID(N'dbo.Messages'), NULL, NULL , 'DETAILED');

GO

Our index is now perfectly defragmented:

But we’re right back to having a bunch of free space in the database:

Shrinking databases and rebuilding indexes is a vicious cycle.

You have high fragmentation, so you rebuild your indexes.

Which leaves a lot of empty space around, so you shrink your database.

Which causes high fragmentation, so you rebuild your indexes, which grows the database right back out and leaves empty space again. And the cycle keeps perpetuating itself.

Break the cycle. Stop doing things that cause performance problems rather than fixing ’em. If your databases have some empty space in ’em, that’s fine – SQL Server will probably need that space again for regular operations like index rebuilds.

Erik Says: Sure, you can tell your rebuild to sort in tempdb, but considering one of our most popular posts is about shrinking tempdb, you’re not doing much better in that department either.
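For reference, the option Erik mentions looks like this on our demo index. It's a sketch, not a fix: `SORT_IN_TEMPDB` only moves the intermediate sort work into tempdb, and the new copy of the index still gets written to WorldOfHurt's own data file.

```sql
/* Same rebuild as earlier, with intermediate sort results stored in tempdb.
   The finished index is still built in the user database's data file. */
ALTER INDEX IX ON dbo.Messages REBUILD WITH (SORT_IN_TEMPDB = ON);
```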